From Hardware to Software

Computers became more powerful in the 1990s, and emulating analog circuits in software became popular. In 1996, Steinberg created a plug-in standard called VST and adopted it in its Cubase software. In 1999, VST2 was released, adding support for instruments. VST plug-in interfaces often resembled real hardware and made a strong visual impact. Many manufacturers adopted VST as their plug-in format, and VST plug-in developers began to appear all over the world. Because a plug-in can be developed by a single person, there are probably hundreds of vendors today.

Here are some of the earliest plug-ins that are still available for free download from Steinberg. They have been maintained and are available in 64-bit versions, so they should work with many DAWs.

Neon: The world's first VST instrument. It is an analog-style synth with a basic two-oscillator configuration, but the oscillators can only be detuned against each other and cannot be programmed separately. Its value lies more in being the first VSTi than in its actual sound quality.

VB-1: At the time, I thought its virtual Stingray-style bass visuals were something of a joke. Being able to move the pickup position and the picking position made it interesting in a way only software can be, but the sound itself was disappointing. Revisiting it now, I find it is actually fun to play with as a sound source.

Model-E: In the early days of virtual analog, this was an ambitious attempt to model the Moog Minimoog Model D. It was visually appealing and became a model for later VST plug-ins.

Below is an advertisement from that time. Its multitimbral design is also a sign of the times. Reviews from around 2000 were often very critical; I suspect people were expecting the sound of a real Moog.

Karlette: An effect rather than an instrument, Karlette was a tape-style delay with four taps. It was novel at the time because it could sync to the DAW's tempo.
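To make "sync to the DAW's tempo" concrete, here is a minimal Python sketch, not Steinberg's code: it converts note values into delay lengths in samples from a BPM and then applies four feed-forward taps. The function names, the 48 kHz sample rate, and the tap gains are illustrative assumptions, and the sketch ignores the tape coloration and feedback a real tape-style delay would have.

```python
def note_delay_samples(bpm: float, beats: float, sample_rate: int = 48000) -> int:
    """Delay length in samples for a note value given in quarter-note beats."""
    seconds_per_beat = 60.0 / bpm
    return round(seconds_per_beat * beats * sample_rate)


def multitap_delay(signal: list[float], tap_delays: list[int],
                   tap_gains: list[float]) -> list[float]:
    """Feed-forward multi-tap delay: dry signal plus delayed, scaled copies.

    Hypothetical illustration only; no feedback, filtering or saturation.
    """
    out = list(signal)
    for delay, gain in zip(tap_delays, tap_gains):
        for n in range(delay, len(signal)):
            out[n] += gain * signal[n - delay]
    return out


if __name__ == "__main__":
    bpm, sr = 120.0, 48000
    # Four taps at a 16th, 8th, dotted 8th and quarter note (in quarter-note beats).
    taps = [note_delay_samples(bpm, b, sr) for b in (0.25, 0.5, 0.75, 1.0)]
    print(taps)  # [6000, 12000, 18000, 24000]
    impulse = [1.0] + [0.0] * 24000
    echoes = multitap_delay(impulse, taps, [0.8, 0.6, 0.4, 0.2])
    print([echoes[t] for t in taps])  # [0.8, 0.6, 0.4, 0.2]
```

If the host tempo changes, only the tap lengths need to be recomputed; the delay structure itself stays the same.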

I personally owned Cubase at the time, but given my computer's specs, I felt that software was not yet up to the task. Since around 2010, however, it has become commonplace to run software synthesizers on a PC that can stand in for the real machines. As a result, demand for expensive hardware synthesizers has decreased, and today software instruments are sufficient for music production.

CLAP: A New Plug-in Standard

Since 2000, several plug-in standards have been created for DAWs, and the most widely used today is VST, mentioned above. However, because it depends on Steinberg's changing specifications, older versions are no longer available, and each version upgrade has raised the bar for development, so it does not seem to be very popular with developers. I have created plug-ins with VST3 myself, and the specification was indeed difficult to grasp. The sample code was not very well organized, and it took a long time to analyze.

So in 2022, Bitwig and u-he created an open plug-in standard called CLAP. It is not overly complicated and appears to be flexible, so I have high expectations for its future. At the time of writing, the DAWs that support CLAP are Bitwig, REAPER, and MultitrackStudio. Below is the CLAP version of u-he's Filterscape.

Steinberg may never support CLAP in Cubase, since it has its own dominant VST format. What surprises me is that Avid, the company behind Pro Tools, supports it. If the industry heavyweights make a move, the situation may change. Incidentally, Steinberg, Bitwig, and u-he are all German companies.

Modeling Techniques

Software synths are heading in several directions. Reproducing the great machines of yesteryear has been done from the very beginning, but in recent years the processing power of modern PCs has been used to close the remaining gap between emulations and the original hardware. The behavior of analog components is a particular focus, and the accuracy improves year by year. Physical modeling, which is computationally expensive, is also becoming more realistic. In this way, effort seems to be going into refining past synthesis methods by reproducing them precisely or combining them in software. Conversely, entirely new synthesis methods seem less likely to emerge. Where will software synthesis go from here? Here are some thoughts that come to mind.
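Physical modeling can be hard to picture, so here is the textbook case as a sketch: the Karplus-Strong plucked-string algorithm, one of the simplest and cheapest physical models. It is a stand-in chosen for illustration, not a description of any product mentioned here; the function name, default damping value, and fixed random seed are assumptions.

```python
import random


def karplus_strong(frequency: float, duration: float, sample_rate: int = 44100,
                   damping: float = 0.996, seed: int = 0) -> list[float]:
    """Plucked-string tone via the Karplus-Strong algorithm.

    A delay line one period long is filled with noise (the "pluck"). Each pass
    through the loop averages adjacent samples, a simple low-pass filter that
    makes high harmonics die away faster than low ones, as on a real string.
    """
    rng = random.Random(seed)
    period = max(2, round(sample_rate / frequency))  # delay-line length in samples
    buffer = [rng.uniform(-1.0, 1.0) for _ in range(period)]
    out = []
    for n in range(int(duration * sample_rate)):
        i = n % period
        sample = buffer[i]
        out.append(sample)
        # Average with the next sample in the loop and apply a small loss.
        buffer[i] = damping * 0.5 * (sample + buffer[(i + 1) % period])
    return out


if __name__ == "__main__":
    tone = karplus_strong(110.0, 0.5)  # half a second of an A2-ish pluck
    print(len(tone), max(abs(x) for x in tone))
```

The entire "string" is one buffer and one averaging step per sample; more realistic models add dispersion, body resonance, and nonlinear excitation, which is where the computational cost mentioned above comes from.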

Software Synths Have Become More and More Sophisticated

As synths become more sophisticated, they require more parameters and more specialized knowledge, which makes them hard for many users to understand. Creating tones is no longer easy, and it is not uncommon to find synths that demand as much knowledge as building a musical instrument. The current approach is to ship a large number of presets created by professional sound designers. End users, on the other hand, can simply pick their favorite tone from the presets, but the sheer number of them makes even that a lot of work. It seems to me that this trend will probably continue with no end in sight. Choosing from a myriad of options is everywhere nowadays, and how we make those choices seems to be becoming more and more important.

Hardware in the Future?

It may not be long before musical instruments become, in effect, personal computers or dedicated controllers for plug-ins, as Native Instruments' NKS standard suggests. However, many people find a PC's general-purpose interface difficult to make music with. Integrating the software with dedicated hardware should give a more direct feel. In this way, the boundary between software and hardware will blur further, and the era of distinguishing between them may end. The traditional style will of course remain, but the mainstream will flow toward greater convenience.

Native Instruments / Kontrol S49 MK3

Synthesizing with Programming

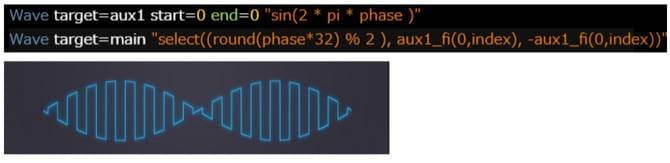

Acoustics, software, and mathematics go hand in hand, so a mathematical approach to sound creation comes naturally during development. However, I had not seen any attempt to make this approach appealing to users. In this context, u-he created uhm, a scripting language for generating waveforms (wavetables). When I tried uhm, it worked well for reproducing a simple chiptune sound. I think it will be useful not only for music but also for research on all kinds of sounds. Below is something I wrote in uhm script; I think it would be difficult to achieve with a regular subtractive synth.
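The uhm syntax itself is not reproduced here. As a rough analogue of formula-driven wavetable design, the following Python sketch evaluates an expression for every sample of every frame, then quantizes to 4 bits for a chiptune flavor. The frame count, frame size, and all function names are hypothetical and unrelated to u-he's actual format.

```python
from typing import Callable

# (phase in [0, 1), position of the frame within the table in [0, 1]) -> sample
Expr = Callable[[float, float], float]


def render_wavetable(expr: Expr, frames: int = 8, size: int = 64) -> list[list[float]]:
    """Evaluate expr for every sample of every frame, like a formula-driven editor."""
    return [
        [expr(n / size, f / max(1, frames - 1)) for n in range(size)]
        for f in range(frames)
    ]


def quantize(x: float, bits: int) -> float:
    """Snap x in [-1, 1] to one of 2**bits evenly spaced levels (chip-style steps)."""
    levels = 2 ** bits - 1
    return round((x + 1.0) / 2.0 * levels) / levels * 2.0 - 1.0


def chip_pulse(phase: float, pos: float) -> float:
    """Pulse wave whose duty cycle sweeps from 12.5% to 50% across the table."""
    duty = 0.125 + 0.375 * pos
    return 1.0 if phase < duty else -1.0


def chip_triangle(phase: float, pos: float) -> float:
    """Triangle wave quantized to 4 bits, reminiscent of stepped chip triangles."""
    tri = 4.0 * abs(phase - 0.5) - 1.0
    return quantize(tri, 4)


if __name__ == "__main__":
    table = render_wavetable(chip_pulse)
    print(len(table), len(table[0]))
```

The point of the design is that the waveform is described by a rule rather than drawn sample by sample, so changing one number (here the duty-cycle range) regenerates the whole table.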

Synthesizing with Graphic Processing

Processing waveforms graphically keeps them neat and orderly and lets what you hear match what you see. Traditionally, only simple waveforms could be created artificially, but now even complex waveforms can be created in most cases. Arbitrary waveforms can be built up from overtone components, but there is nothing wrong with creating them visually instead. This may open up possibilities in combination with graphics software used for visual work.
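Building a waveform from overtones and drawing it directly are two views of the same data. The sketch below, plain Python with hypothetical function names, sums sine partials into one cycle and, in the other direction, recovers the harmonic amplitudes of any drawn cycle with a direct DFT (an FFT library would do the same thing faster).

```python
import math


def additive_cycle(harmonics: dict[int, float], size: int = 256) -> list[float]:
    """One waveform cycle as a sum of sine partials: {harmonic number: amplitude}."""
    return [
        sum(a * math.sin(2 * math.pi * k * n / size) for k, a in harmonics.items())
        for n in range(size)
    ]


def harmonic_magnitudes(cycle: list[float], max_harmonic: int) -> list[float]:
    """Amplitudes of harmonics 1..max_harmonic of a drawn cycle, via a direct DFT."""
    size = len(cycle)
    mags = []
    for k in range(1, max_harmonic + 1):
        re = sum(x * math.cos(2 * math.pi * k * n / size) for n, x in enumerate(cycle))
        im = sum(x * math.sin(2 * math.pi * k * n / size) for n, x in enumerate(cycle))
        mags.append(2.0 * math.hypot(re, im) / size)  # scale so a unit sine reads 1.0
    return mags


if __name__ == "__main__":
    # Overtones -> waveform: a band-limited sawtooth from 1/k partials.
    saw = additive_cycle({k: 1.0 / k for k in range(1, 9)})
    # Drawn waveform -> overtones: a square "drawn" as two flat halves.
    square = [1.0] * 128 + [-1.0] * 128
    print([round(m, 3) for m in harmonic_magnitudes(square, 5)])
```

Running the analysis on the drawn square shows only odd harmonics, which is why the two approaches can be mixed freely: whatever is drawn can always be read back as overtones.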

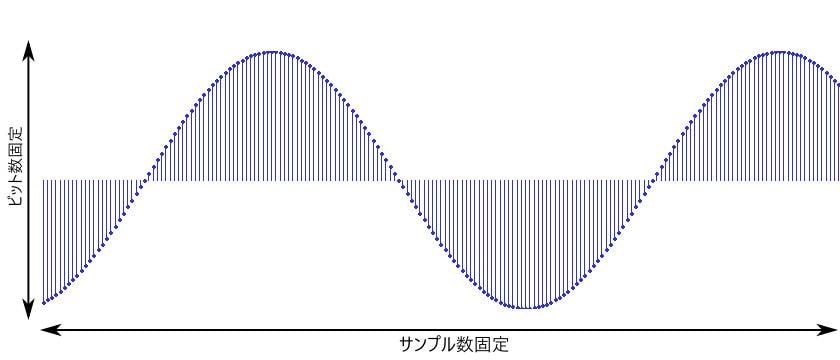

Currently, the mainstream approach is to process PCM data, but as the amount of data grows, it becomes bulky and inflexible. This is similar to raster data, which represents images as pixels. The image world also has vector data, which is composed of points and lines and is independent of resolution. Vector data can be smaller and more flexible, and each format is used according to the application; raster and vector data complement each other. Something similar is likely to happen in the world of music.

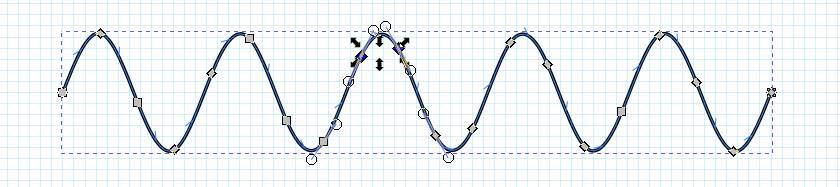

This is one of u-he's efforts to let waveforms be imported and exported as vector (SVG) data. No usable product has been released yet, but it will be used in Zebralette 3, which may be available by the end of 2023. SVG is a standard vector graphics format that is already supported by many browsers.

The figure above shows the current mainstream way of handling waveforms. The number of samples (time resolution) and the number of bits (amplitude resolution) are fixed, and everything is processed within those limits. Conceptually, the points always sit on a staircase-like grid at a fixed resolution. Increasing the number of samples or bits increases the amount of data accordingly. Also, changing the sampling frequency or the amplitude causes degradation. The problem is similar to that of raster image data.
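The arithmetic behind this is simple enough to show directly. The Python sketch below computes uncompressed PCM size from sample rate, bit depth, channel count, and duration, and measures the staircase (quantization) error of a sine stored at a given bit depth. The function names and the 0.9 test amplitude are illustrative assumptions.

```python
import math


def pcm_bytes(sample_rate: int, bit_depth: int, channels: int, seconds: float) -> int:
    """Uncompressed PCM size: every sample of every channel stores bit_depth bits."""
    return int(sample_rate * seconds) * channels * bit_depth // 8


def quantize_to_bits(x: float, bits: int) -> float:
    """Round x in [-1, 1] to the nearest step of a signed bits-bit integer grid."""
    scale = 2 ** (bits - 1)
    q = max(-scale, min(scale - 1, round(x * scale)))  # clamp to the integer range
    return q / scale


def max_quantization_error(bits: int, size: int = 1000) -> float:
    """Largest staircase error when one sine cycle is stored at the given bit depth."""
    worst = 0.0
    for n in range(size):
        x = 0.9 * math.sin(2 * math.pi * n / size)
        worst = max(worst, abs(x - quantize_to_bits(x, bits)))
    return worst


if __name__ == "__main__":
    print(pcm_bytes(44100, 16, 2, 60))  # 10584000 bytes for one minute of CD-quality stereo
    print(pcm_bytes(96000, 24, 2, 60))  # 34560000 bytes: more samples and bits, more data
    for bits in (4, 8, 16):
        # The error bound is half a step, so it halves with each extra bit.
        print(bits, max_quantization_error(bits))
```

Both effects described above appear here: finer resolution costs proportionally more data, and whatever resolution is chosen, the stored values are snapped to a fixed staircase.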

On the other hand, u-he's vector (SVG) approach, shown in the figure below, has stepless resolution and can greatly reduce the amount of data by using far fewer control points. In addition, because curves can be manipulated freely, it holds up well when waveforms are deformed, for example by morphing. When the final sound is produced, the vector is converted into ordinary samples, but until that moment it is processed as a vector like this.
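u-he's actual SVG handling is not documented here, so the following is only a sketch of the general idea under simplifying assumptions: a cycle is stored as a few cubic Bézier segments, rendered to any number of samples on demand, and morphed by interpolating control points. The `Segment` type, the rule that the inner control points sit at one-third and two-thirds of each segment's span, and all function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """One cubic Bezier piece of a single-cycle waveform (hypothetical format)."""
    start: float  # phase where the segment begins, in [0, 1)
    end: float    # phase where it ends, in (0, 1]
    y: tuple[float, float, float, float]  # amplitudes: start, control 1, control 2, end


def eval_segment(seg: Segment, phase: float) -> float:
    # Assumption: the inner control points sit at 1/3 and 2/3 of the span, which
    # makes the curve's x(t) linear, so t follows directly from the phase.
    t = (phase - seg.start) / (seg.end - seg.start)
    u = 1.0 - t
    y0, y1, y2, y3 = seg.y
    return u**3 * y0 + 3 * u * u * t * y1 + 3 * u * t * t * y2 + t**3 * y3


def render(shape: list[Segment], num_samples: int) -> list[float]:
    """Convert the vector cycle into ordinary samples at any requested resolution."""
    out = []
    for n in range(num_samples):
        phase = n / num_samples
        seg = next(s for s in shape if s.start <= phase < s.end)
        out.append(eval_segment(seg, phase))
    return out


def morph(a: list[Segment], b: list[Segment], amount: float) -> list[Segment]:
    """Blend two shapes with matching segment layouts by interpolating control points."""
    def lerp(p: float, q: float) -> float:
        return p + (q - p) * amount

    return [
        Segment(lerp(sa.start, sb.start), lerp(sa.end, sb.end),
                tuple(lerp(ya, yb) for ya, yb in zip(sa.y, sb.y)))
        for sa, sb in zip(a, b)
    ]


# Two hypothetical shapes, each stored as just two segments (eight amplitudes).
ROUNDED = [Segment(0.0, 0.5, (0.0, 1.0, 1.0, 0.0)),
           Segment(0.5, 1.0, (0.0, -1.0, -1.0, 0.0))]
SAW = [Segment(0.0, 0.5, (0.0, 1 / 3, 2 / 3, 1.0)),
       Segment(0.5, 1.0, (-1.0, -2 / 3, -1 / 3, 0.0))]

if __name__ == "__main__":
    halfway = morph(ROUNDED, SAW, 0.5)
    # The same few control points can be rendered at any sample count.
    print(len(render(halfway, 64)), len(render(halfway, 2048)))  # 64 2048
```

Morphing happens on eight numbers rather than on thousands of samples, and conversion to PCM is deferred to the final `render` call, which mirrors the "vector until just before output" idea in a simplified form.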

The Future of Synthesizers

Clearly, synthesizers have moved from hardware to software, which is inexpensive and easy to handle, and this is one of the factors behind their widespread use. Synthesizers are no longer special. In addition, sound quality has improved over the past decade to the point where software can replace hardware.

As a developer, I am most interested in new methods of sound synthesis, but in general the synthesis method matters little, because what counts is the sound. In fact, I believe many presets are made by combining several synthesis methods.

Even if new synthesis methods emerge in the future, I don't think they will replace the conventional ones. Each synthesis method seems to have matured, and we have entered an era of combining them. Even if a new method is developed, it will likely be treated as just one more means of creating presets, with little attention paid to its substance. It seems to me that combining synthesis methods according to their purpose will become the norm, and the process of doing so will become more important.

As this evolution continues, the developer, the sound designer, and the user will drift further apart. As each role becomes more sophisticated, specialization is inevitable. Still, I would like to point out that those who can straddle these roles can certainly create something new by making active use of their position.

The “sound & person” column is made possible by your contributions.
