In the previous issue, I traced the history of electronic instruments and gave a light introduction that went up to the Moog synthesizer. In this issue, I will explain the principles of the main electronic instruments, but first, let’s review the difference between analog and digital.

Electronic technology started with analog circuits, and digital technology only became common in the late 1970s. The Moog synthesizer was first introduced in 1964, when digital technology was not yet ready to be applied to musical instruments. At that time, therefore, it was simply called a synthesizer. From today’s perspective, where digital technology is the norm, the synthesizers of that era were built from 100% analog circuits, so we now call them analog synthesizers to emphasize that they are not digital.

Analog synths make sound with analog circuits

“Analog synths” means that the sound is created with analog circuits. The components that make up an analog circuit are ordinary general-purpose parts such as transistors, op-amps, inductors, capacitors, and resistors, as shown in the picture. An op-amp is a convenient package with several transistors inside. The important point is that the circuit is entirely analog.

Audio signals created by analog circuits are waveforms that are continuous in both time and level. Also, electrical signals propagate at close to the speed of light, so even a complex circuit has the advantage of a practically instantaneous response. I think this is the greatest feature of analog. It is taken for granted in an electric circuit, but digital circuits cannot do this.

The problems with analog circuits are that they are susceptible to noise, the sound degrades with each processing stage, and they are difficult to make compact. They also require many components, which makes them expensive. Analog circuits are not suitable for inexpensive, compact, high-functionality products, which is why they have been replaced by digital circuits.

Digital Circuits

Digital circuits handle audio as discrete rather than continuous signals, and information is sent as binary numbers (e.g., 5 V = 1, 0 V = 0). Neither the time axis nor the signal level is continuous; instead, the signal is represented at regular intervals, so it looks like a staircase. For this reason, digital signals inevitably have a finite resolution. For sound, a resolution is chosen that does not seem unnatural to human ears. The reason for going to all this trouble is that, by replacing the sound with numerical values instead of handling the sound itself, we can convey and process it accurately without degradation.

As an example, a CD has a sampling frequency of 44.1 kHz, so time is handled in units of 1/44,100 of a second. This means that whatever happens between samples is not recorded. The level (volume) of the sound is handled with 16 bits (about 96 dB) and is expressed from -1 to 1 in 65,536 steps. Here too, the original sound is rounded to the nearest step. This resolution was adopted for CDs because it was considered good enough for music appreciation, despite its deficiencies.
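The CD figures can be checked with a little arithmetic. The following is a minimal Python sketch, not taken from any real audio library: the function names `dynamic_range_db` and `quantize` are made up for illustration, and the rounding scheme (signed integer codes scaled by 2 to the 15th power) is one common convention that I am assuming here.

```python
import math

SAMPLE_RATE_HZ = 44_100  # CD sampling frequency
BIT_DEPTH = 16           # CD bit depth


def dynamic_range_db(bits: int) -> float:
    """Ratio between full scale and a single step, in decibels."""
    return 20.0 * math.log10(2 ** bits)


def quantize(level: float, bits: int = BIT_DEPTH) -> float:
    """Round a level in [-1, 1] to the nearest step (assumed signed-integer scheme)."""
    scale = 2 ** (bits - 1)                   # 32,768 for 16 bits
    code = round(level * scale)               # nearest integer code
    code = max(-scale, min(scale - 1, code))  # clip to the codes that exist
    return code / scale


if __name__ == "__main__":
    print(f"time step : {1 / SAMPLE_RATE_HZ * 1e6:.2f} microseconds")
    print(f"steps     : {2 ** BIT_DEPTH:,}")
    print(f"range     : {dynamic_range_db(BIT_DEPTH):.1f} dB")
    print(f"0.3 is stored as {quantize(0.3)!r}")
```

Under these assumptions, 16 bits gives 65,536 steps and a range of about 96.3 dB, and a level such as 0.3 cannot be stored exactly: it lands on the nearest step, at most half a step away.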

The figure below shows one cycle of a 441 Hz sine wave at CD specs: 100 samples make up one cycle of the sound.
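The number of samples per cycle is simply the sampling frequency divided by the frequency of the tone. As a rough sketch in Python (the helper names `samples_per_cycle` and `one_cycle` are my own, chosen only for this illustration), the sample values of one cycle can be generated like this:

```python
import math


def samples_per_cycle(sample_rate_hz: float, tone_hz: float) -> float:
    """How many samples fall within one cycle of the tone."""
    return sample_rate_hz / tone_hz


def one_cycle(sample_rate_hz: int, tone_hz: int) -> list[float]:
    """Sample values of one cycle of a sine wave, in the range -1 to 1."""
    count = round(samples_per_cycle(sample_rate_hz, tone_hz))
    return [
        math.sin(2.0 * math.pi * tone_hz * n / sample_rate_hz)
        for n in range(count)
    ]


if __name__ == "__main__":
    cycle = one_cycle(44_100, 441)
    print(len(cycle), "samples in one cycle")
    print("peak is reached at sample", cycle.index(max(cycle)))
```

A 441 Hz tone was presumably chosen because it divides 44,100 evenly; a tone such as 440 Hz gives a non-integer number of samples per cycle, which this sketch simply rounds.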

Incidentally, early digital instruments had a low resolution of 20-30 kHz and 8 bits, which seems rather coarse; with values about half those of a CD, they would be insufficient for music appreciation. Such low resolution was acceptable for instruments because of the various processing stages applied afterward.

Today, the trend is toward even higher resolution, with sampling frequencies as high as 96 kHz becoming commonplace and equipment capable of recording in 32-bit float. 32-bit float looks like a magical format that can handle anything from 0 to practically infinity, but if you take that at face value, you could be in serious trouble. The resolution is still 32 bits; the format merely assigns those bits so that they cover an enormous range (up to roughly 3.4 × 10^38, not actually infinity). In other words, the 4,294,967,296 bit patterns of 32 bits are divided between plus and minus, and the closer a value is to 0, the finer the resolution. Although 32-bit float has become a hot topic in recent years, I have not seen many articles that go into it in depth, so I would like to explain it in a column sometime soon.
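The uneven resolution of 32-bit float can be observed directly. The sketch below uses only Python's standard `struct` module to reinterpret a 32-bit float as an integer bit pattern and step to the next pattern; the helper names `to_float32` and `float32_step` are hypothetical, and the approach assumes non-negative, finite input values.

```python
import struct


def to_float32(value: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float value."""
    return struct.unpack("<f", struct.pack("<f", value))[0]


def float32_step(value: float) -> float:
    """Gap between a non-negative 32-bit float and the next larger one."""
    bits = struct.unpack("<I", struct.pack("<f", value))[0]
    upper = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return upper - to_float32(value)


# Largest finite 32-bit float: very large, but not infinite.
FLOAT32_MAX = struct.unpack("<f", struct.pack("<I", 0x7F7FFFFF))[0]

if __name__ == "__main__":
    for level in (0.001, 1.0, 1000.0):
        print(f"step near {level:>8}: {float32_step(level):.3e}")
    print(f"largest finite value: {FLOAT32_MAX:.3e}")
```

The step between neighboring values near 1000 is roughly half a million times larger than the step near 0.001, which is what "the closer to 0, the finer the resolution" means in practice.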

The photo below shows an IC (an FM sound source) and a crystal oscillator (the silver component), both of which are indispensable for digital circuits. ICs used in digital circuits look similar to the op-amps used in analog circuits, but they operate in steps timed by the frequency of the crystal oscillator. Recently, there are many all-in-one ICs in which various components, including the crystal oscillator, are packed inside the chip. ICs are often made as dedicated chips because this allows complex circuits to be made extremely small. This integration is one of the advantages of digitization. However, since such chips are not general-purpose parts, they cannot be replaced once they are no longer manufactured.

Furthermore, digital has a major problem when dealing with sound in real time: delay is inherent in digital. Since digital computation is carried out step by step, a large amount of computation inevitably results in a slight delay. Emulation-type effects are popular in real-time performance, but it is important to note that the delay problem always exists to a greater or lesser extent.

The trouble is that a slight delay leads to a troublesome phenomenon called “habituation”. As an extreme example, a delay of 20 msec is comparable to playing an electric guitar while standing about 6.8 m away from the (analog) amp. If you practice in this environment every day and get used to it, you will be thrown off by the overly quick response when you use equipment with a 2 msec delay (about 0.7 m). In the past, the opposite often happened, with players who were used to an immediate response being bothered by delay, and I did not expect the situation to reverse. In any case, it goes without saying that minimal delay is desirable.
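The distances quoted for each delay come from the time sound takes to travel through air. Here is a small Python sketch of that conversion; the speed of sound of 340 m/s is my assumption (it matches the figures in the text, but the real value varies with temperature), and the function names are invented for this example.

```python
SPEED_OF_SOUND_M_PER_S = 340.0  # assumed value; varies with air temperature


def delay_to_distance_m(delay_ms: float) -> float:
    """Distance sound travels through air during the given delay."""
    return SPEED_OF_SOUND_M_PER_S * delay_ms / 1000.0


def distance_to_delay_ms(distance_m: float) -> float:
    """Delay a listener hears when standing this far from the amp."""
    return distance_m / SPEED_OF_SOUND_M_PER_S * 1000.0


if __name__ == "__main__":
    for delay_ms in (2.0, 20.0):
        print(f"{delay_ms:>4} msec is like standing {delay_to_distance_m(delay_ms):.2f} m away")
```

Seen this way, every additional millisecond of processing delay corresponds to moving about 34 cm farther from the amp.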

From Analog to Digital

Although analog is superior in terms of sound resolution and latency, its other shortcomings led many electronic devices to go digital in the 1980s for the sake of convenience. Synthesizers were a typical example and were gradually converted to digital. It seems that most of the parts that could be replaced by digital devices have been digitized. The result is high-quality, low-noise synthesizers with more advanced functions than analog could ever hope to achieve.

However, since around 2000, there has been a movement to revive analog, and its advantages are being reevaluated. I believe that analog will come to be used more widely in the key areas where its strengths matter.

Now that you have some understanding of the difference between analog and digital, in the next article, I would like to look at the flow of analog synthesizer (subtractive type) sound creation.

The “sound & person” column is made up of contributions from you.


![[2026 Latest Edition] Choosing a Synthesizer/Popular Synthesizers Ranking](/contents/uploads/thumbs/2/2022/9/20220916_2_19446_1.jpg)

![[BOSS DD-2] ~ Analog-like digital delay ~](/contents/uploads/thumbs/5/2020/11/20201120_5_11619_1.jpg)

自分にあったピアノを選ぼう!役立つピアノ用語集

自分にあったピアノを選ぼう!役立つピアノ用語集

各メーカーの鍵盤比較

各メーカーの鍵盤比較

用途で選ぶ!鍵盤楽器の種類

用途で選ぶ!鍵盤楽器の種類

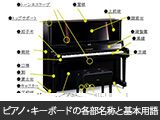

ピアノ・キーボードの各部名称

ピアノ・キーボードの各部名称

キーボードスタートガイド

キーボードスタートガイド

キーボード・ピアノ講座

キーボード・ピアノ講座